|

I am joining McGill University as an Assistant Professor and MILA as a Core Academic Member. McGill University is ranked in the top 30 worldwide according to the QS Ranking 2024. Our lab studies Large Language Models, Trustworthy AI, and Embodied AI. Please apply to both 1) MILA and 2) McGill ECE and mention my name in your application. The deadline for McGill is Dec 15. Due to the high volume of inquiries, I may not be able to respond to individual emails. If you are interested in working with me, please fill out this form. I finished my Ph.D. at the Department of Computer Science at Columbia University, where I worked on computer vision and robust machine learning. I was fortunate to be advised by Prof. Junfeng Yang and Prof. Carl Vondrick. I did my bachelor's degree at Tsinghua University, advised by Prof. Yuan Shen. I was a visiting student at MIT, advised by Prof. Dina Katabi. Email / CV / Google Scholar / Twitter |

|

|

[2024 Feb]: One paper on diffusion generation is accepted by CVPR 2024 as a Highlight (top 2%). [2024 Jan]: Two papers are accepted by ICLR 2024. [2023 Sep]: Our paper on Convolutional Visual Prompt is accepted by NeurIPS 2023. [2023 Oct]: Our paper on Causal Learning for Program Analysis is accepted by ICSE 2024. Robust ML for another real-world application. [2023 Sep]: I became a Core Academic Member at MILA, the Quebec AI Institute, led by Yoshua Bengio, who won the Turing Award for deep learning. [2023 July]: Our paper on fast test-time optimization is accepted by ICCV 2023. [2023 July]: I became Dr. Mao! I thank my advisors Carl Vondrick and Junfeng Yang, as well as David Blei, Richard Zemel, and Hao Wang for serving on my thesis committee. [2023 April]: Our paper on equivariance for robustness is accepted by ICML 2023. [2023 March]: Two papers are accepted by CVPR 2023. [2023 Feb]: One paper is accepted by ICLR 2023. 2022: We organized the ICLR 2022 Workshop on PAIR^2Struct: Privacy, Accountability, Interpretability, Robustness, Reasoning on Structured Data. 2022: I did a summer internship at Microsoft Research on Video Perception. 2021: I did a summer internship at Google Research on Vision Transformer, check our ICLR paper! 2020: I did a summer internship at Waymo, where I worked on 3D point clouds and detection. I'm interested in the robustness and open-world generalization of machine learning and computer vision models. My work uses 1) intrinsic structure from natural data and 2) extrinsic structure from domain knowledge to robustify perception. Representative papers are highlighted. |

|

|

Haozhe Chen, Carl Vondrick, Chengzhi Mao arXiv, 2024 (New) arXiv / project page / code . |

|

Chengzhi Mao, Carl Vondrick, Hao Wang, Junfeng Yang ICLR, 2024 (New) arXiv / dataset / code . |

|

Haozhe Chen, Junfeng Yang, Carl Vondrick, Chengzhi Mao ICLR, 2024 (New) arXiv / dataset / code . |

|

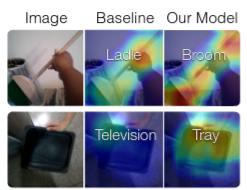

Chengzhi Mao, Revant Teotia, Amrutha Sundar, Sachit Menon, Junfeng Yang, Xin Wang, Carl Vondrick CVPR, 2023 (New) arXiv / dataset / code We propose a "doubly right" object recognition benchmark, which requires the model to simultaneously produce both the right labels and the right rationales. State-of-the-art vision-language models, such as CLIP, often provide incorrect rationales for their categorical predictions. By transferring the rationales from language models to visual representations, we show we can learn a "why prompt" that adapts CLIP to produce correct rationales. |

|

Yun-Yun Tsai*, Chengzhi Mao*, Junfeng Yang NeurIPS, 2023 (New) arXiv / dataset / code We propose a simple and effective convolutional visual prompt (CVP) for adapting visual foundation models. By adapting it at inference time, our CVP can reverse many corruptions in the input image, producing robust visual perception. |

|

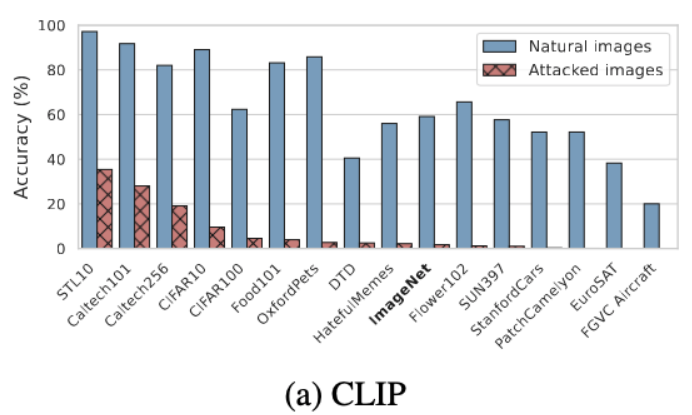

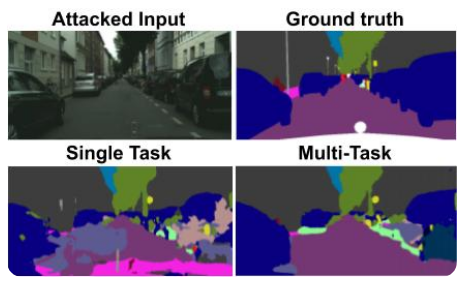

Chengzhi Mao*, Scott Geng*, Junfeng Yang, Xin Wang, Carl Vondrick ICLR, 2023 (New) arXiv / code Existing adversarially trained models only obtain adversarial robustness on the tasks they are trained on. We introduce a new task, "zero-shot adversarial robustness", where the model needs to be adversarially robust on unseen tasks and datasets. We find that the popular CLIP model fails to be adversarially robust. We then instruct the CLIP model to be robust with a robust prompt or finetuning, demonstrating that zero-shot robustness transfers to unseen tasks. The key is to use the right language supervision in the learning objective. |

|

Ruoshi Liu, Chengzhi Mao, Purva Tendulkar, Hao Wang, Carl Vondrick ICCV, 2023 (New) arXiv / blogpost One major bottleneck for applying test-time optimization is the speed of convergence. We introduce a framework that accelerates test-time inference through amortization. Our method can improve test-time optimization speed on GAN inversion, adversarial defense, and 3D human pose estimation by up to 100 times. |

|

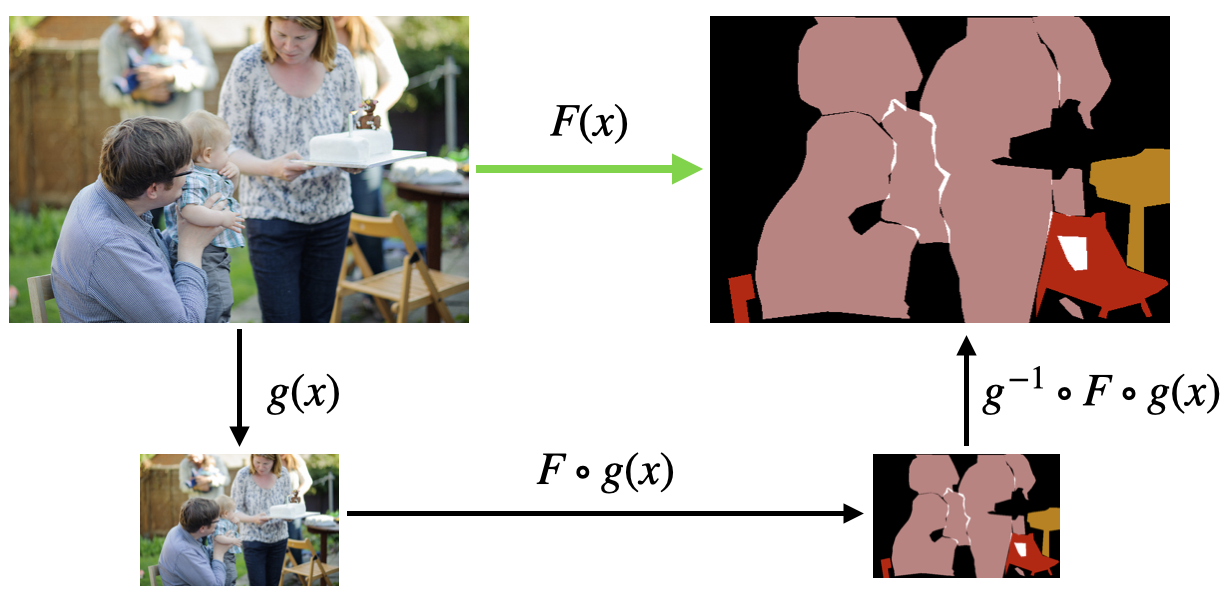

Chengzhi Mao, Lingyu Zhang, Abhishek Joshi, Junfeng Yang, Hao Wang, Carl Vondrick ICML, 2023 (New) arXiv / webpage / code We introduce a framework that uses the intrinsic equivariance constraints in natural images to robustify inference. |

|

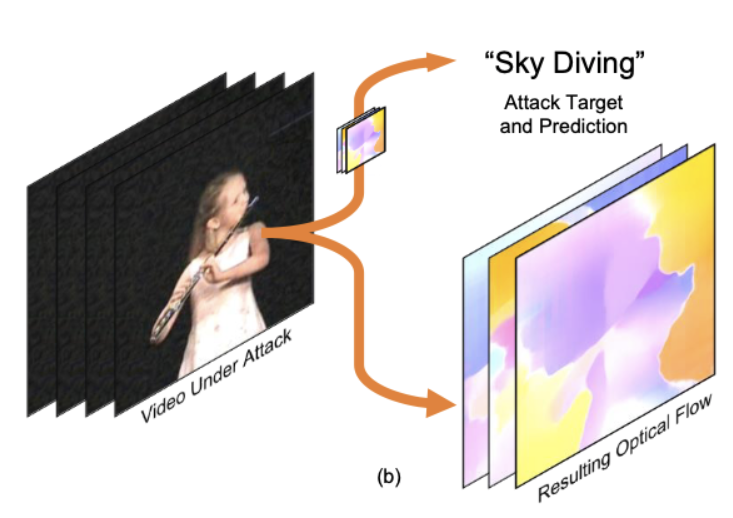

Lingyu Zhang*, Chengzhi Mao*, Junfeng Yang, Carl Vondrick Preprint, 2022 (New) arXiv / Page / code / cite / talk Robust video perception is challenging due to the high-dimensional input. Yet video is also highly structured. We introduce a framework that robustifies video perception by respecting motion structure at inference time. |

|

Ruoshi Liu, Sachit Menon, Chengzhi Mao, Dennis Park, Simon Stent, Carl Vondrick CVPR, 2023 (New) arXiv / blogpost / cite / talk We use inference-time optimization to recover the 3D shape of an object from only its shadow, even if the object is occluded. |

|

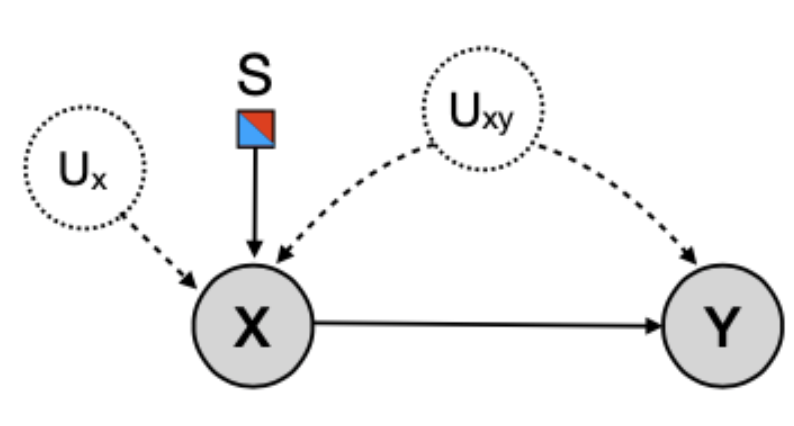

Chengzhi Mao*, Kevin Xia*, James Wang, Hao Wang, Junfeng Yang, Elias Bareinboim, Carl Vondrick CVPR, 2022 arXiv / code / cite / talk We show that the causal effect is transportable across domains in visual recognition. Without observing additional variables, we show that we can derive an estimand for the causal effect using representations in deep models as proxies. Results show that our approach captures causal invariances and improves generalization. |

|

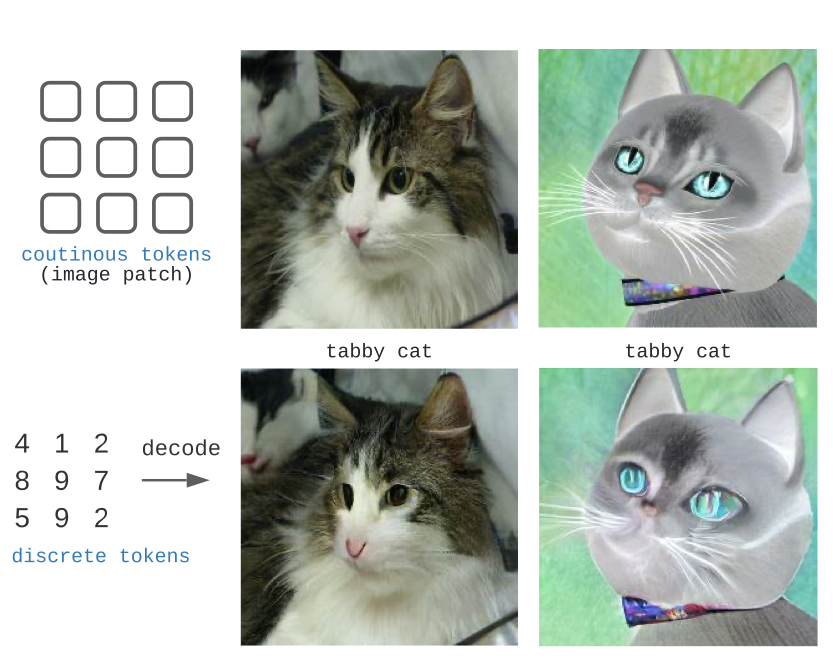

Chengzhi Mao, Lu Jiang, Mostafa Dehghani, Carl Vondrick, Rahul Sukthankar, Irfan Essa ICLR, 2022 arXiv / code / cite / talk We find that ViTs are overly reliant on local features instead of global context. We propose a simple and effective modification to ViT's input layer by adding discrete tokens from a vector-quantized encoder. Discrete tokens contain less information individually, encouraging the ViT to learn global information, which is invariant. Our modification improves out-of-distribution generalization by up to 12%. |

|

Mia Chiquier, Chengzhi Mao, Carl Vondrick ICLR, 2022 (Oral) arXiv / code / cite / Science / talk Standard adversarial attacks are not effective in real-time streaming situations because the characteristics of the signal will have changed by the time the attack is executed. We introduce predictive attacks, which achieve real-time performance by forecasting the attack that will be the most effective in the future. |

|

Chengzhi Mao, Mia Chiquier, Hao Wang, Junfeng Yang, Carl Vondrick ICCV, 2021 arXiv / code / cite / talk We find that adversarial attacks on image classification also collaterally disrupt incidental structure in the image. By modifying the input image to restore its natural structure, we can reverse adversarial attacks for defense. |

|

Chengzhi Mao, Augustine Cha*, Amogh Gupta*, Hao Wang, Junfeng Yang, Carl Vondrick CVPR, 2021 paper / arXiv / code / talk / cite Discriminative models often learn naturally occurring spurious correlations, which cause them to fail on images outside of the training distribution. We introduce a framework for learning robust visual representations that are more consistent with the underlying causal relationships. |

|

Chengzhi Mao, Amogh Gupta*, Vikram Nitin*, Baishakhi Ray, Shuran Song, Junfeng Yang, Carl Vondrick ECCV, 2020 (Oral Presentation) arXiv / oral talk video / long video / code / cite What causes adversarial vulnerabilities? Our work shows that deep networks are vulnerable to adversarial examples partly because they are trained on too few tasks. |

|

Guangyu Shen, Chengzhi Mao, Junfeng Yang, Baishakhi Ray ECCV Workshop: Adversarial Robustness in the Real World, 2020 arXiv / conference page We learn nuisance transformations with a conditional GAN that fool state-of-the-art adversarially robust segmentation models in one step. |

|

Robby Costales, Chengzhi Mao, Raphael Norwitz, Bryan Kim, Junfeng Yang CVPR Workshop on Adversarial Machine Learning in Computer Vision, 2020 arXiv We add a backdoor to a live neural network model by migrating only a few weights. |

|

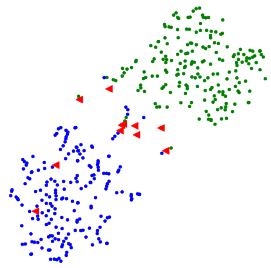

Chengzhi Mao, Ziyuan Zhong, Junfeng Yang, Carl Vondrick, Baishakhi Ray NeurIPS, 2019 arXiv / code / cite We increase the robustness of classifiers by regularizing the representation space under attack with metric learning. The key is to select the proper triplet of data. |

|

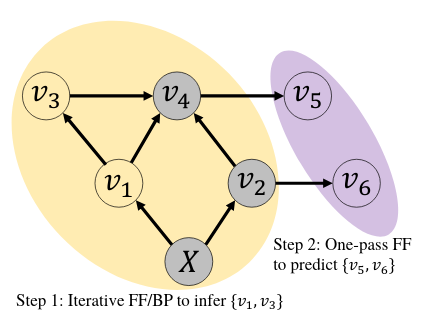

Hao Wang, Chengzhi Mao, Hao He, Mingmin Zhao, Tommi S. Jaakkola, Dina Katabi AAAI, 2019 MIT News / arXiv We propose a probabilistic neural network that performs bidirectional inference, and demonstrate its capabilities on high-dimensional data, including EEG, ECG, and breathing signals. |

|

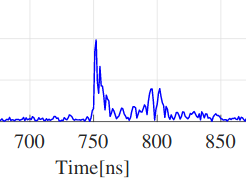

Chengzhi Mao, Kangbo Lin, Tiancheng Yu, Yuan Shen 2018 IEEE Global Communications Conference, 2019 Paper Link / code / cite We propose a probabilistic neural network framework that corrects the UWB ranging error and produces an uncertainty estimate for the correction. |

|

Reviewer: ICLR 2020, 2021, NeurIPS 2020, AAAI 2021, CVPR 2021, ICML 2021, ICCV 2021 Teaching Assistant: Security and Robustness of Machine Learning Teaching Assistant: Computer Vision (II) Students I advised: Amogh Gupta (now at Amazon Research), Guangyu Shen (PhD student at Purdue), Robby Costales (PhD student at USC), Augustine Cha, Cynthia Mao (undergraduate), James Wang (undergraduate), Bennington Li (undergraduate), Revant Teotia, Lingyu Zhang, Abhishek Joshi, Matthew Lawhon (undergraduate), Scott Geng (undergraduate), Tony Chen (undergraduate), Noah McDermott, Amrutha Sundar I thank Jon Barron for the website template. |