
Localized Men on Mission Strategy

This strategy attempted to improve on last year's Men on Mission player and succeeded in a variety of game configurations. It takes advantage of the fact that robots are more effective at creating rectangles close to their current position than at drawing ones farther away. This notion led to a localized rectangle search algorithm that is much more focused and efficient than a randomized or brute-force search.

I. Basic Algorithm and Analysis

Here is some pseudocode explaining the algorithm:

Compute: maxDelta = n * RISKINESS

For each robot r with position p, perform:

- If the robot is already building a rectangle R, continue building it, unless its associated score S(R) (explained below) drops to zero.

- Otherwise, iterate over all rectangles with one vertex at p such that the rectangle's length and width are both at least three and both bounded by maxDelta, and its perimeter does not lie on filled cells.

- For each rectangle matching the above criteria, count the number of unfilled cells in its interior. This count is the score S(R) for that rectangle.

- Save the coordinates of the rectangle R with the highest score.

- If the highest-scoring rectangle has a score of at least one, build a state machine for the robot instructing it to move to the opposite corner of the rectangle, since p is already one of the corners.

- Otherwise, if the highest score is 0, do not draw the rectangle. Just keep moving.
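The rectangle search in the pseudocode above can be sketched in Python as follows. This is a minimal illustration, not our player's actual code: it assumes the board is a grid of booleans where `filled[y][x]` is true for claimed cells, that n is the board width, and that "length and width of at least three" means the two corners differ by at least three cells in each direction. The names `max_delta`, `perimeter`, `score` and `best_rectangle` are hypothetical.

```python
from typing import Iterator, List, Optional, Tuple

Board = List[List[bool]]  # assumption: filled[y][x] is True once a cell is claimed
Cell = Tuple[int, int]    # (x, y)


def max_delta(n: int, riskiness: float) -> int:
    """maxDelta = n * RISKINESS, with n assumed to be the board width."""
    return int(n * riskiness)


def perimeter(p: Cell, q: Cell) -> Iterator[Cell]:
    """Yield every border cell of the rectangle with opposite corners p and q."""
    (xa, xb), (ya, yb) = sorted((p[0], q[0])), sorted((p[1], q[1]))
    for x in range(xa, xb + 1):
        yield (x, ya)
        yield (x, yb)
    for y in range(ya + 1, yb):
        yield (xa, y)
        yield (xb, y)


def score(filled: Board, p: Cell, q: Cell) -> int:
    """S(R): the number of unfilled cells strictly inside the rectangle."""
    (xa, xb), (ya, yb) = sorted((p[0], q[0])), sorted((p[1], q[1]))
    return sum(
        1
        for y in range(ya + 1, yb)
        for x in range(xa + 1, xb)
        if not filled[y][x]
    )


def best_rectangle(filled: Board, p: Cell, delta: int) -> Tuple[Optional[Cell], int]:
    """Return (opposite corner, score) of the best rectangle with one vertex at p."""
    height, width = len(filled), len(filled[0])
    best: Optional[Cell] = None
    best_score = 0
    # Try every opposite corner in all four directions, bounded by maxDelta.
    for dx in range(-delta, delta + 1):
        for dy in range(-delta, delta + 1):
            if abs(dx) < 3 or abs(dy) < 3:  # sides must be at least three
                continue
            q = (p[0] + dx, p[1] + dy)
            if not (0 <= q[0] < width and 0 <= q[1] < height):
                continue  # corner would fall off the board
            if any(filled[y][x] for x, y in perimeter(p, q)):
                continue  # border would lie on filled cells
            s = score(filled, p, q)
            if s > best_score:  # a score of 0 never replaces "no rectangle"
                best, best_score = q, s
    return best, best_score
```

On an empty 10 by 10 board, a robot at (0, 0) with RISKINESS = 0.5 gets maxDelta = 5 and picks the opposite corner (5, 5), whose interior holds 16 unfilled cells. When no candidate scores above zero, the function returns `(None, 0)`, which corresponds to the "just keep moving" branch.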

|

|

|

|

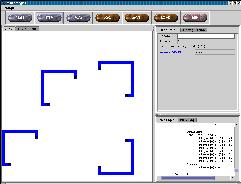

Round 5 |

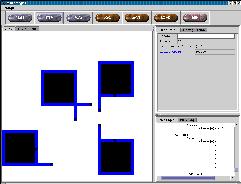

Round 27 |

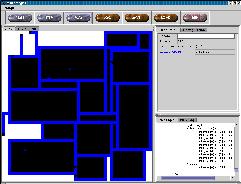

Round 113 |

|

This strategy has several advantages over a randomized search:

- The robots are able to build more quickly, since no movement is required to get into the starting position.

- If a robot has no unfilled cells around it, it will move around the perimeter of the board until it finds unfilled cells. This means that the robots systematically search for free space. At the same time, they act as guards against the opponent drawing a rectangle around the entire perimeter, an important defense in a two-player game.

- Parameterizing the RISKINESS factor makes it easy to adjust how aggressive the player should be. For a more aggressive player, this value can be set to 0.5. For a more conservative player, it should be less than 0.25.

- Since the robots go for rectangles near the ones they have already constructed, they are more likely to reuse existing rectangle borders when building new rectangles. This means that much of the time, a robot only has to draw one or two edges before the rectangle is completed. Since the score of the rectangle is rechecked on every turn in the first step of the algorithm, the robot stops drawing a rectangle as soon as it is either complete or no longer viable.

- This strategy is also good at finding the last few free areas in the later stages of the game. Recognizing this considerable advantage, Group 1 incorporated it into their own player to complement their main strategy. As a result, Group 1 performed as well as or better than our player in many games.
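The per-turn recheck of S(R) in the first step of the algorithm is what lets a robot drop a rectangle once it is complete or no longer viable. A minimal sketch, assuming (hypothetically) that closing a rectangle fills its interior cells, so a completed rectangle's score falls to zero; `RobotPlan` and `keep_building` are illustrative names, not part of our player:

```python
from typing import List, Optional, Tuple

Board = List[List[bool]]  # assumption: filled[y][x] is True once a cell is claimed
Cell = Tuple[int, int]    # (x, y)


def interior_score(filled: Board, p: Cell, q: Cell) -> int:
    """S(R): unfilled cells strictly inside the rectangle with corners p and q."""
    xa, xb = sorted((p[0], q[0]))
    ya, yb = sorted((p[1], q[1]))
    return sum(
        1 for y in range(ya + 1, yb) for x in range(xa + 1, xb) if not filled[y][x]
    )


class RobotPlan:
    """Per-robot state: the rectangle currently being built, if any."""

    def __init__(self) -> None:
        self.rect: Optional[Tuple[Cell, Cell]] = None  # (start corner, opposite corner)

    def keep_building(self, filled: Board) -> bool:
        """First step of each turn: keep the current rectangle only while S(R) > 0."""
        if self.rect is None:
            return False
        if interior_score(filled, *self.rect) == 0:
            # Completed (interior now filled) or claimed by another player: abandon it.
            self.rect = None
            return False
        return True
```

For a rectangle from (0, 0) to (4, 4), `keep_building` returns True while any of the nine interior cells is unfilled; once all nine are filled it returns False and clears the plan, so the robot falls through to the rectangle search on the same turn.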

One major problem with this approach is that, unless an opening is used that takes up a lot of territory in the middle of the board, it is difficult for the robots to find and claim free space that is not in their immediate vicinity. Similarly, the algorithm does not guarantee that the robots will choose the optimal rectangles to draw at any given time. To address these problems, we began developing a modification to make the player more flexible.

II. Improving the Basic Algorithm

One approach that we liked from the Men on Mission player was its inherent ability to discover large patches of free space. To mimic this behaviour, we coded the following modification:

- At the start of every turn, sample random locations on the board and check whether those locations are in an area of free space. Give each location a score based on the number of unfilled cells nearby.

- For each robot:

  - If the highest-scoring rectangle at the robot's location has score zero, check the list of board samples.

  - Assuming the list is not empty, take the sampled location with the highest score.

  - Build a state machine to instruct the robot to move to that location.

  - Once relocated, the robot should continue with the Basic Algorithm.
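The sampling modification can be sketched as follows. The sample count `k`, the square window of size `radius` used to count "nearby" unfilled cells, and all function names are assumptions made for illustration; the text does not specify how nearby cells are counted.

```python
import random
from typing import List, Optional, Tuple

Board = List[List[bool]]  # assumption: filled[y][x] is True once a cell is claimed
Cell = Tuple[int, int]    # (x, y)


def local_free_count(filled: Board, c: Cell, radius: int) -> int:
    """Score a location by the unfilled cells in the square window around it."""
    height, width = len(filled), len(filled[0])
    x0, y0 = c
    return sum(
        1
        for y in range(max(0, y0 - radius), min(height, y0 + radius + 1))
        for x in range(max(0, x0 - radius), min(width, x0 + radius + 1))
        if not filled[y][x]
    )


def sample_open_areas(
    filled: Board, k: int, radius: int, rng: random.Random
) -> List[Tuple[int, Cell]]:
    """Once per turn: sample k random cells, keep those in free space, best first."""
    height, width = len(filled), len(filled[0])
    samples = []
    for _ in range(k):
        c = (rng.randrange(width), rng.randrange(height))
        s = local_free_count(filled, c, radius)
        if s > 0:  # only keep locations that actually touch free space
            samples.append((s, c))
    samples.sort(reverse=True)
    return samples


def relocation_target(local_best_score: int, samples: List[Tuple[int, Cell]]) -> Optional[Cell]:
    """Relocate only when no rectangle at the current position scores above zero."""
    if local_best_score > 0 or not samples:
        return None  # stay put and keep running the Basic Algorithm
    return samples[0][1]
```

Scoring a sample by a square window is the simplest reading of "the number of unfilled cells that are nearby"; it costs on the order of radius squared per sample, so a few dozen samples per turn stay cheap.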

This procedure effectively causes robots in highly filled areas to move to more open locations. Unfortunately, the robots can get stuck at local minima in terms of rectangle score: as long as the best nearby rectangle scores above zero, however low, the relocation step is never triggered. Second, if the robots do not move along the perimeter, the player is in danger of losing to the naive shoot-the-moon strategy.

III. Pseudo-cooperative Behaviour

Although the framework we used allows for the creation of teams of robots employing different strategies, we chose not to concentrate on that aspect for this particular player. Instead, this player was meant to demonstrate our most effective yet stable offensive strategy. Taking advantage of more complex cooperative or adaptive behaviour is discussed in the Strategies Switching section.

However, we did make some modifications so that the robots would appear to cooperate in groups of two. To achieve this, we initialized the robots in pairs. Each pair acted as a team: the first robot would build a rectangle by moving east/west and then north/south, while the second robot would build in the opposite order. As a result, the two robots would build rectangles simultaneously as long as they were in the same location. This was useful at the beginning of the game, when fast expansion is critical.
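One way to read this pairing, sketched under the assumption that x runs east/west and y runs north/south: both robots start at corner p and head for the opposite corner q along different edges, so between them they cover the whole border. The helpers `leg`, `half_route` and `paired_routes` are hypothetical names, not code from our player.

```python
from typing import List, Tuple

Cell = Tuple[int, int]  # (x, y); assumption: x is east/west, y is north/south


def leg(a: Cell, b: Cell) -> List[Cell]:
    """Cells visited moving in a straight line from a to b (inclusive).

    a and b must share a row or a column.
    """
    (ax, ay), (bx, by) = a, b
    step_x = (bx > ax) - (bx < ax)  # -1, 0 or +1
    step_y = (by > ay) - (by < ay)
    cells = [a]
    x, y = a
    while (x, y) != b:
        x, y = x + step_x, y + step_y
        cells.append((x, y))
    return cells


def half_route(p: Cell, q: Cell, horizontal_first: bool) -> List[Cell]:
    """Path from corner p to the opposite corner q along two edges of the rectangle."""
    corner = (q[0], p[1]) if horizontal_first else (p[0], q[1])
    return leg(p, corner) + leg(corner, q)[1:]  # drop the duplicated corner


def paired_routes(p: Cell, q: Cell) -> Tuple[List[Cell], List[Cell]]:
    """First robot: east/west then north/south. Second robot: the opposite order."""
    return half_route(p, q, True), half_route(p, q, False)
```

For the rectangle from (0, 0) to (3, 2), the two routes share only their endpoints and their union is exactly the ten border cells, so each robot walks roughly half of the perimeter.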

IV. Tournament Results and Future Work

Here are some highlights from the tournament results for this player:

| Rank | Number of Robots | Number of Players | Board Size | Comments |
|---|---|---|---|---|
| 1st | 2 | 4 | 40 | |
| 1st | 5 | 6 | 50 | |
| 2nd | 3 | 6 | 50 | |
| 2nd | 2 | 6 | 50 | |
| 3rd* | 10 | 2 | 40 | Highest-ranking non-chaser |
| 3rd | 3 | 2 | 50 | |

The results show that our player was particularly effective in games with few robots per player and many players. Aside from the two players that employed a chasing strategy (our newest anti-chasing implementation was not complete in time for the tournament), our player was also the best in the more standard 10-robot, 2-player scenario (*).

We expect that our player's performance in games with many robots will improve with better cooperation among the robots. Some key features that are missing include:

- Improved logic for cooperative rectangle tracing

- Optimal rectangle tracing

- Rectangle size vs. risk calculation

- A more intelligent anti-chasing algorithm that monitors the length of the robot's trail: if the trail length does not change for several consecutive moves, the robot should stop and wait until the chaser goes away.
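The anti-chasing heuristic in the last item could look something like the following. The class is hypothetical, not part of our tournament player, and the threshold `patience` stands in for "several consecutive moves"; its default of 3 is an arbitrary assumption. Deciding when the chaser has gone away, and therefore when to resume, is left open here, as in the text.

```python
from typing import Optional


class TrailMonitor:
    """Hypothetical anti-chasing check: signal a stop when the trail length stalls."""

    def __init__(self, patience: int = 3) -> None:
        self.patience = patience  # assumed threshold for "several consecutive moves"
        self.last_length: Optional[int] = None
        self.stalled_moves = 0

    def should_wait(self, trail_length: int) -> bool:
        """Call once per move with the current trail length; True means stop and wait."""
        if trail_length == self.last_length:
            self.stalled_moves += 1
        else:
            self.stalled_moves = 0  # the trail changed, so reset the counter
        self.last_length = trail_length
        return self.stalled_moves >= self.patience
```

Fed the trail lengths 1, 2, 3, 3, 3, 3, 4 with `patience=3`, the monitor signals a stop only on the sixth move, after the length has stayed at 3 for three consecutive moves, and clears again once the trail grows.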